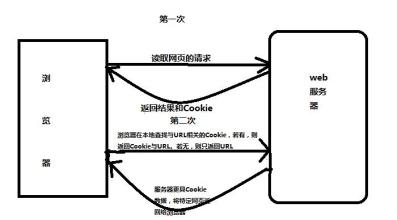

Let's first look at the way of logging in to a website that most people already know:

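#!/usr/bin/python
# -*- coding: utf-8 -*-
# Python 2 code: it relies on urllib2, cookielib and the print statement.
import urllib
import urllib2
import cookielib

auth_url = 'http://www.nowamagic.net/'
home_url = 'http://www.nowamagic.net/'

# Login username and password
data = {
    "username": "nowamagic",
    "password": "pass"
}
# URL-encode the form fields with urllib
post_data = urllib.urlencode(data)

# Request headers to send
headers = {
    "Host": "www.nowamagic.net",
    "Referer": "http://www.nowamagic.net"
}

# Create a CookieJar to hold the cookies
cookieJar = cookielib.CookieJar()
# Build an opener that passes every request and response through the CookieJar
opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(cookieJar))

# Log in; the cookies set by the server are stored in the CookieJar
req = urllib2.Request(auth_url, post_data, headers)
result = opener.open(req)

# Visit the home page; the opener sends the stored cookies automatically
result = opener.open(home_url)

# Print the result
print result.read()

This approach only works for sites that authenticate with nothing more than a username and password; it fails when a site adds further verification steps. A few related cases are given below: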
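Before turning to those cases, note that the listing above targets Python 2. The same flow can be sketched for Python 3, where `cookielib` became `http.cookiejar` and `urllib2` was split into `urllib.request` and `urllib.parse`. This is only a minimal sketch: the helper names `make_opener`, `build_login_request` and `login_and_fetch` are made up for illustration, and the form field names are simply the ones used in the article.

```python
import http.cookiejar
import urllib.parse
import urllib.request

AUTH_URL = "http://www.nowamagic.net/"  # login address used in the article
HOME_URL = "http://www.nowamagic.net/"  # page fetched after logging in


def make_opener():
    """Return (opener, jar); the opener stores and resends cookies through jar."""
    jar = http.cookiejar.CookieJar()
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def build_login_request(url, username, password):
    """Build the POST request that submits the login form."""
    form = urllib.parse.urlencode({"username": username, "password": password})
    # In Python 3 the request body must be bytes, so the encoded form is encoded again.
    return urllib.request.Request(url, data=form.encode("utf-8"), headers={"Referer": url})


def login_and_fetch(username, password):
    """Log in, then fetch the home page with the cookies obtained at login."""
    opener, _jar = make_opener()
    with opener.open(build_login_request(AUTH_URL, username, password)) as response:
        response.read()  # the login response fills the cookie jar
    with opener.open(HOME_URL) as response:
        return response.read()  # this request carries the stored cookies
```

Nothing is sent over the network until `login_and_fetch("nowamagic", "pass")` is actually called; the two builder functions can be inspected offline.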

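1. Access a site using an existing cookie file:

import os
import cookielib, urllib2

# url, postdata and header are placeholders that the caller must define.
# The path is a raw string so that backslash sequences such as \t are not read as escapes.
ckjar = cookielib.MozillaCookieJar(os.path.join(r'C:\Documents and Settings\tom\Application Data\Mozilla\Firefox\Profiles\h5m61j1i.default', 'cookies.txt'))
ckjar.load()  # the constructor only records the filename; load() reads the cookies
req = urllib2.Request(url, postdata, header)
req.add_header('User-Agent',
               'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)')
opener = urllib2.build_opener(urllib2.HTTPCookieProcessor(ckjar))
f = opener.open(req)
htm = f.read()
f.close()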
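Loading an existing cookie file can be tried out without a browser profile or a network connection. The following Python 3 sketch writes a one-line file in the Netscape `cookies.txt` format, loads it, and shows which `Cookie` header the jar would attach to a request. The cookie (`sessionid=abc123` for `.example.com`) and the helper names are invented for illustration.

```python
import http.cookiejar
import os
import tempfile
import urllib.request

# One cookie in Netscape cookies.txt format, tab-separated:
# domain, subdomain flag, path, secure flag, expiry (epoch seconds), name, value.
# The domain, name and value are made up for this illustration.
SAMPLE_COOKIES = (
    "# Netscape HTTP Cookie File\n"
    ".example.com\tTRUE\t/\tFALSE\t2147483647\tsessionid\tabc123\n"
)


def load_cookie_file(path):
    """Load a cookies.txt file and return an opener that sends those cookies."""
    jar = http.cookiejar.MozillaCookieJar(path)
    jar.load()  # without this call the jar stays empty
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def cookie_header_for(jar, url):
    """Return the Cookie header the jar would add to a request for url, or None."""
    request = urllib.request.Request(url)
    jar.add_cookie_header(request)
    return request.get_header("Cookie")


def demo():
    """Write the sample file to a temporary directory, load it, and inspect the jar."""
    with tempfile.TemporaryDirectory() as tmp:
        path = os.path.join(tmp, "cookies.txt")
        with open(path, "w") as handle:
            handle.write(SAMPLE_COOKIES)
        _opener, jar = load_cookie_file(path)
        names = [cookie.name for cookie in jar]
        return names, cookie_header_for(jar, "http://www.example.com/")
```

`demo()` returns the names of the loaded cookies together with the header value, which makes it easy to confirm that a cookie file was really read before any request is sent.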

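2. Visit a site to obtain cookies, and save them to a cookie file:

import cookielib, urllib2

# url, postdata, header and filename are placeholders that the caller must define.
req = urllib2.Request(url, postdata, header)
req.add_header('User-Agent',
               'Mozilla/4.0 (compatible; MSIE 6.0; Windows NT 5.1)')
ckjar = cookielib.MozillaCookieJar(filename)
ckproc = urllib2.HTTPCookieProcessor(ckjar)
opener = urllib2.build_opener(ckproc)
f = opener.open(req)
htm = f.read()
f.close()
# ignore_discard=True also saves session cookies; ignore_expires=True also saves expired ones
ckjar.save(ignore_discard=True, ignore_expires=True)

3. Generate a cookie from the specified parameters (here, by submitting a login form), and use that cookie to access the site: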

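import urllib
import cookielib, urllib2

# url is a placeholder for the login page address; the caller must define it.
cookiejar = cookielib.CookieJar()
urlOpener = urllib2.build_opener(urllib2.HTTPCookieProcessor(cookiejar))
values = {'redirect': '', 'email': 'abc@abc.com',
          'password': 'password', 'rememberme': '', 'submit': 'OK, Let Me In!'}
data = urllib.urlencode(values)
# Submit the login form; the cookies in the response are stored in cookiejar
request = urllib2.Request(url, data)
response = urlOpener.open(request)
print response.info()
page = response.read()
# Request the page again; the opener now sends the stored cookies
request = urllib2.Request(url)
response = urlOpener.open(request)
page = response.read()
print page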
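If the cookie's name and value are already known (for example, copied from a browser session), the jar can also be filled directly instead of through a login form. The Python 3 sketch below assumes a made-up `sessionid` cookie for `.example.com`; `make_cookie`, `opener_with_cookie` and `cookie_header_for` are hypothetical helpers. `http.cookiejar.Cookie` objects are normally created by the library itself, so building one by hand is shown here purely as an illustration.

```python
import http.cookiejar
import urllib.request


def make_cookie(name, value, domain, path="/"):
    """Build a version-0 session cookie by hand (hypothetical helper)."""
    return http.cookiejar.Cookie(
        version=0, name=name, value=value,
        port=None, port_specified=False,
        domain=domain, domain_specified=True,
        domain_initial_dot=domain.startswith("."),
        path=path, path_specified=True,
        secure=False, expires=None, discard=True,
        comment=None, comment_url=None, rest={},
    )


def opener_with_cookie(cookie):
    """Return (opener, jar) where the jar already contains the given cookie."""
    jar = http.cookiejar.CookieJar()
    jar.set_cookie(cookie)
    opener = urllib.request.build_opener(urllib.request.HTTPCookieProcessor(jar))
    return opener, jar


def cookie_header_for(jar, url):
    """Return the Cookie header the jar would add to a request for url, or None."""
    request = urllib.request.Request(url)
    jar.add_cookie_header(request)
    return request.get_header("Cookie")
```

Requests made through the returned opener carry the cookie only for matching hosts: `cookie_header_for` yields a header for `http://www.example.com/page`, while a request to an unrelated host gets none.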

- Permalink: http://ttfde.top/index.php/post/316.html

- When reposting, please credit: published by admin on TTF的家园
